Intro

I've never been quite sure how to interpret the Ultiworld power rankings. I, like all ultimate players, care only about my team's finish at nationals. Therefore I usually view Ultiworld power rankings as an imprecise yardstick by which to measure my team's chances at nationals. I am always happy to see my team rise in the rankings and disappointed to see it fall. But how precise is the yardstick? If my team is ranked near the bottom or not at all, how much should we fret (how destined are we to lose)? If my team is ranked near the top, how much should we rejoice (how assured are we of success)?

To some degree, the rankings are accurate. If my team rises to #1 on the rankings, surely we are in a good spot to finish well at nationals; if my team falls from the rankings entirely, we will probably struggle at nationals, if we get there at all.

The time of year matters too: at the beginning of the season, the predictions are probably less accurate. The data provided by tournaments should increase the precision of rankings as nationals approaches.

I also wonder about rankings between divisions. Is the men's or women's division more accurate (or are they the same)? D1 or D3? College or club?

The approach

I'll dive into a couple of these questions in this blog post. Hopefully one day I'll come back and explore more. We'll start with one basic case, and probably the best one: the men's and women's D1 college divisions. Using these divisions has a few advantages over other divisions:

- They have a long season. Over the season a steadily increasing amount of data is gathered about the teams through tournaments. The club season, in comparison, is shorter and has more sporadic tournament play

- Rosters are steady over the course of the season. In club, many rosters are smaller at early season tournaments, meaning the data gathered is less useful

- It is harder to predict solely from rosters in college than in club, meaning that the tournament data makes up a larger portion of the prediction [1]

- A larger number of Ultiworld contributors contribute to the D1 rankings than the D3 rankings, meaning they are a more stable average of people's opinions

We will use the placement of each team at nationals as our gold standard labels. This data is noisy, as there's a lot of variance within a single tournament, but it is unbiased, and we have plenty of data points: 16 teams x 8 seasons x 2 divisions = 256. Nationals is all that anyone cares about, so it makes sense as our gold standard.

There are a few drawbacks with this approach. Each is listed below alongside how we will choose to address it.

- Due to the bid system, there are teams at nationals outside of the top 20. We will ignore the bottom 4 finishing teams at nationals

- Ultiworld's power rankings are not meant to be predictive of nationals finish. Maybe. Ultiworld doesn't say on their site exactly what the power rankings are meant to convey, but it's likely that they are supposed to be predictions about the current strength of each team, i.e., how teams would finish if nationals were played on the day of the prediction. Even if they're not meant to be predictive, we can still evaluate how predictive they are.

- What metric do we use to evaluate the accuracy of predictions? Spearman's rank correlation coefficient (Spearman's rho) is a widely used measure of agreement between ordinal rankings. In our setting, it is essentially a linear rescaling of the mean squared error (MSE) between predicted and actual ranks (exactly so when neither ranking has ties), so we'll use MSE, as it is easier to interpret.

- What about ties? We'll account for ties by giving each tied team the average of the places the tie spans. For example, the four teams eliminated in quarterfinals, who tie for 5th, are each given a rank of 6.5 (halfway between 5th and 8th). Predictions of 5 and 8 are then equally accurate for teams eliminated in quarters, as are predictions of 1 and 12.

- What about teams in Ultiworld's top 16 that don't qualify for nationals? We'll give them a ranking of 21. There is a valid argument to be had that this should be a bigger penalty, but at the end of the day this is an arbitrary judgement call.

- What about the 2020 and 2021 college seasons? In 2020, nationals was cancelled. The 2021 series followed a shortened season full of anomalies (essentially no regular season tournaments; uncertainty about school eligibility), and its champions were largely determined by which teams were able to practice together. We'll exclude both the 2020 and 2021 seasons.

- What about BYU? As BYU forfeits the series, we will remove them from the Ultiworld rankings.
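To make the scoring rules above concrete, here is a minimal Python sketch of how a single ranking could be scored. It is not the code behind the graphs in this post: the function names, the `finish` dictionary, and the choice to score only the first 16 ranked teams are assumptions made for illustration. The post also doesn't pin down what happens to a ranked team that finishes in the bottom four at nationals, so the sketch leaves that to the caller (any team missing from `finish` gets the penalty rank of 21). The last helper uses the standard identity for Spearman's rho between two untied rankings of the same n items, rho = 1 - 6 * MSE / (n^2 - 1).

```python
from statistics import mean

UNQUALIFIED_RANK = 21  # rank given to ranked teams that miss nationals
SCORED_PLACES = 16     # assumption: only the first 16 ranked teams are scored


def tie_rank(first_place: int, last_place: int) -> float:
    """Average of the places a tie spans, e.g. places 5 through 8 -> 6.5."""
    return (first_place + last_place) / 2


def score_ranking(predicted, finish, excluded=frozenset({"BYU"})):
    """Mean squared error between predicted rank and nationals finish.

    predicted: team names, best first (one week's power ranking).
    finish: team name -> tie-adjusted place at nationals.
    """
    # Drop excluded teams first so every team below them moves up a spot.
    ranked = [team for team in predicted if team not in excluded]
    errors = []
    for position, team in enumerate(ranked[:SCORED_PLACES], start=1):
        actual = finish.get(team, UNQUALIFIED_RANK)
        errors.append(float(position - actual) ** 2)
    return mean(errors)


def spearman_from_mse(mse: float, n: int) -> float:
    """Spearman's rho implied by an MSE, valid for two untied rankings of n items."""
    return 1 - 6 * mse / (n ** 2 - 1)


# Made-up teams and results, only to show the call shape.
example_finish = {"A": 1, "B": tie_rank(3, 4)}
example_score = score_ranking(["A", "BYU", "B", "C"], example_finish)
```

In the made-up example, BYU is removed, so B is treated as the #2 prediction and C, who is missing from `example_finish`, is scored against a finish of 21.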

Results

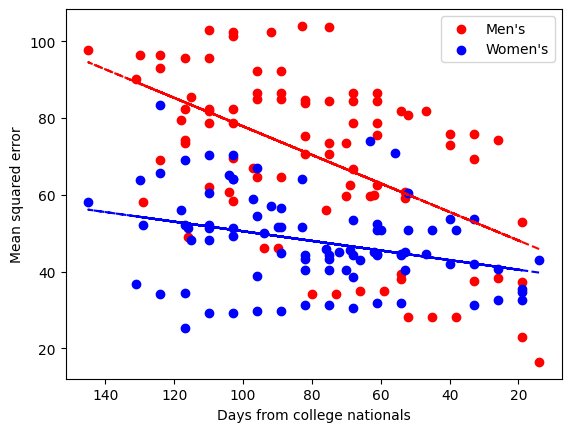

These assumptions allow us to look at the accuracy over time of predictions in Ultiworld's power rankings for men's and women's college teams from 2014 to 2023. We can scrape Ultiworld and run the numbers to generate the following graph. The graph is below, with men's in red and women's in blue.
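As a rough illustration of the "run the numbers" step, here is a minimal Python sketch of how per-ranking scores could be averaged across seasons into one curve per division. This is an assumption about the aggregation, not a description of the actual code: the post doesn't specify the x-axis, so `weeks_out` (weeks remaining before nationals) is a hypothetical choice, as are the function name and the tuple layout.

```python
from collections import defaultdict
from statistics import mean


def average_error_by_week(scores):
    """Average MSE across seasons for each (division, weeks_out) pair.

    scores: iterable of (division, season, weeks_out, mse) tuples, one per
    published ranking. weeks_out is a hypothetical time axis.
    """
    buckets = defaultdict(list)
    for division, _season, weeks_out, mse in scores:
        buckets[(division, weeks_out)].append(mse)
    return {key: mean(values) for key, values in buckets.items()}


# Made-up numbers, only to show the shape of the input and output.
example_scores = [
    ("men", 2022, 10, 40.0),
    ("men", 2023, 10, 50.0),
    ("women", 2022, 10, 30.0),
    ("women", 2023, 10, 20.0),
]
curve = average_error_by_week(example_scores)
```

Plotting each division's averaged values against the time axis would give one line per division.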

There are a couple straightforward but interesting takeaways:

- Predictions in the women's division are generally a bit more accurate than the men's division

- In both divisions, predictions do get more accurate as nationals gets closer

- By nationals, predictions in the men's division are nearly as accurate as predictions in the women's division

That's about all I can learn from this graph.

Ultiworld vs USAU rankings

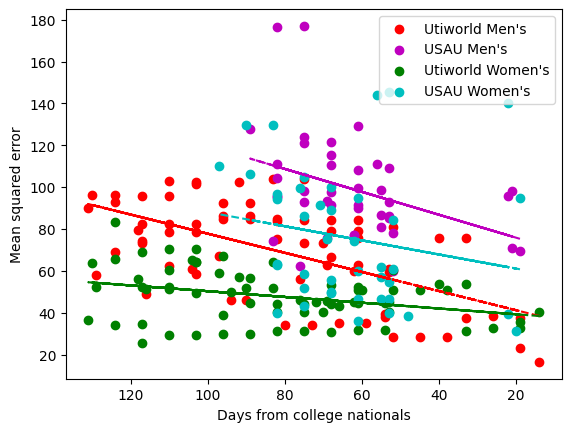

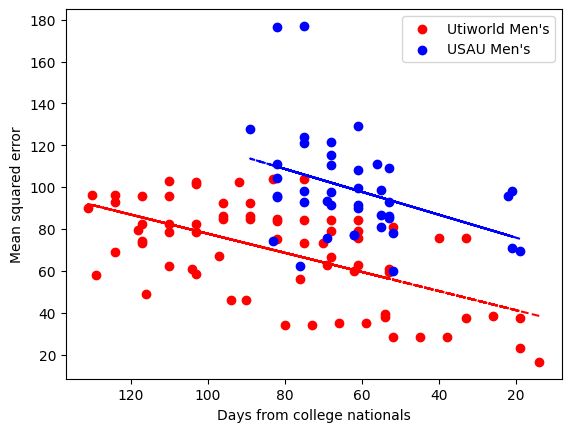

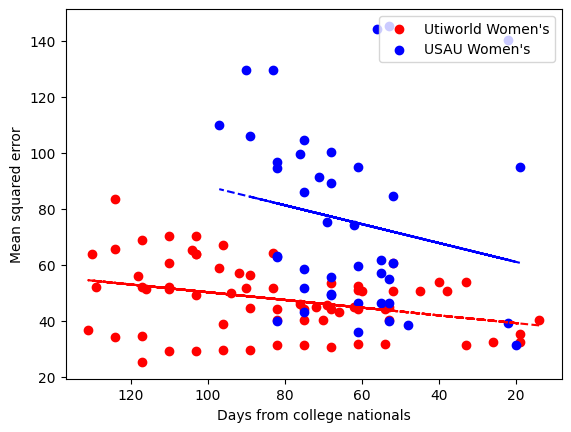

We can repeat this procedure to compare USAU rankings with Ultiworld rankings. There are a couple of drawbacks with the USAU data:

- USAU does not start ranking teams until the start of the spring season, so there are fewer rankings per season

- USAU did not calculate rankings for the 2022 season, so we only have 6 years of data

Even so, there should be enough data. Here are three graphs, one which contains both divisions and two that each contain one division.

Takeaways I see are:

- USAU's rankings are consistently less accurate than Ultiworld's rankings - through the whole season, for both divisions

- USAU's rankings get more accurate as the season goes on, as Ultiworld's do

- Both USAU and Ultiworld rankings are less predictive in the men's division. Thus it appears the women's division is more predictable

- USAU's accuracy at the end of the season is similar to Ultiworld's accuracy at the beginning of the season

Conclusion

There's a lot more to be done with this framework. A few ideas: how accurate is D1 vs D3; club vs college? How much stability is there in each different division, over the course of the season and over the course of multiple seasons? Does Ultiworld systematically over-rank or under-rank groups of teams such as blue-blood teams, teams new on the scene, or teams from specific regions? Is there a way we can use the USAU and Ultiworld rankings to come up with significantly more accurate predictions than either? Hopefully I'll one day follow up with blog posts exploring each of those questions.

Resources

[1] This is an unproven belief of mine. It would be good to follow up and compare the accuracy of the first ranking of the season of club teams vs the same of college teams.